What is an OLTP Database?

OLTP stands for Online Transaction Processing. It refers to a type of database system designed to handle large numbers of small, quick operations such as inserts, updates, deletes, and short lookups. These operations are usually grouped into transactions, for example making a bank transfer, booking a flight ticket, or purchasing an item online.

An OLTP database focuses on speed, concurrency, and reliability, ensuring that millions of users can perform operations simultaneously without data loss or corruption.
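To make "transactional" concrete, here is a minimal sketch of a bank transfer. It uses Python's built-in `sqlite3` module as a stand-in for a server database such as PostgreSQL or MySQL; the `accounts` table and the `transfer` helper are hypothetical. The point is that the debit and the credit run inside one transaction, so either both are saved or neither is.

```python
import sqlite3


def transfer(conn: sqlite3.Connection, src: int, dst: int, amount: int) -> None:
    """Hypothetical helper: move `amount` from account `src` to account `dst`."""
    # `with conn:` commits when the block finishes and rolls back if it raises,
    # so the debit and the credit are applied together or not at all.
    with conn:
        conn.execute("UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src))
        conn.execute("UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst))


conn = sqlite3.connect(":memory:")
# Hypothetical table; balances are whole currency units to keep the example simple.
conn.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER NOT NULL)")
conn.executemany("INSERT INTO accounts (id, balance) VALUES (?, ?)", [(1, 500), (2, 100)])
conn.commit()

transfer(conn, 1, 2, 200)
print(conn.execute("SELECT id, balance FROM accounts ORDER BY id").fetchall())
# [(1, 300), (2, 300)]
```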

A Brief History of OLTP Databases

- 1960s–1970s: Early database systems relied heavily on hierarchical and network models. Transaction processing was limited and often batch-oriented.

- 1970s–1980s: Edgar F. Codd's relational model (1970) led to relational database systems, which gave OLTP a more structured, declarative foundation.

- 1980s–1990s: As businesses connected more branch offices and terminals to central systems, the demand for real-time transaction processing grew. IBM's CICS (Customer Information Control System), first released in 1969, remained a cornerstone of mainframe OLTP.

- 2000s–Today: Modern OLTP databases (e.g., Oracle, MySQL, PostgreSQL, SQL Server) have evolved with features like replication, clustering, and distributed transaction management to support large-scale web and mobile applications.

Main Characteristics of OLTP Databases

- High Transaction Throughput

  - Capable of handling thousands to millions of operations per second.

  - Optimized for small, frequent read/write queries.

- Concurrency Control

  - Multiple users can access and modify data at the same time.

  - Uses mechanisms such as locking, multiversion concurrency control (MVCC), and configurable isolation levels to keep concurrent transactions from interfering with each other.

- Real-Time Processing

  - Transactions are processed as requests arrive rather than in batches, so users get immediate feedback.

- Data Integrity

  - Enforces strict ACID compliance (Atomicity, Consistency, Isolation, Durability).

  - Committed data survives crashes and power failures, typically because changes are recorded in a write-ahead (redo) log before a commit is acknowledged.

- Normalization

  - OLTP databases are usually highly normalized (commonly to third normal form) to reduce redundancy and maintain consistency.
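As a minimal illustration of normalization, the hypothetical e-commerce schema below (again using Python's `sqlite3` as a stand-in) stores each customer's details in exactly one row and has orders refer to customers by key. Table and column names are assumptions for the example. Note that SQLite only enforces foreign keys after `PRAGMA foreign_keys = ON`; server databases such as PostgreSQL enforce them by default.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled

# Hypothetical normalized schema: each fact lives in exactly one table.
conn.executescript("""
CREATE TABLE customers (
    id    INTEGER PRIMARY KEY,
    name  TEXT NOT NULL,
    email TEXT NOT NULL UNIQUE
);
CREATE TABLE products (
    id          INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    price_cents INTEGER NOT NULL CHECK (price_cents >= 0)
);
CREATE TABLE orders (
    id          INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(id),
    created_at  TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
);
CREATE TABLE order_items (
    order_id   INTEGER NOT NULL REFERENCES orders(id),
    product_id INTEGER NOT NULL REFERENCES products(id),
    quantity   INTEGER NOT NULL CHECK (quantity > 0),
    PRIMARY KEY (order_id, product_id)
);
""")

conn.execute("INSERT INTO customers (id, name, email) VALUES (1, 'Ada', 'ada@example.com')")
conn.execute("INSERT INTO orders (id, customer_id) VALUES (10, 1)")
conn.commit()

# The email is stored once, so changing it touches one row no matter how many orders exist.
conn.execute("UPDATE customers SET email = 'ada@example.org' WHERE id = 1")
conn.commit()

# An order pointing at a customer that does not exist is rejected by the foreign key.
fk_rejected = False
try:
    conn.execute("INSERT INTO orders (id, customer_id) VALUES (11, 999)")
    conn.commit()
except sqlite3.IntegrityError as exc:
    conn.rollback()
    fk_rejected = True
    print("rejected:", exc)
```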

Key Features of OLTP Databases

- Fast Query Processing: Designed for quick response times.

- Support for Concurrent Users: Handles thousands of simultaneous connections.

- Transaction-Oriented: Focused on CRUD operations (Create, Read, Update, Delete).

- Error Recovery: Rollback undoes incomplete transactions, and recovery mechanisms restore a consistent state after a crash.

- Security: Role-based access and encryption ensure secure data handling.
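Error recovery is easiest to see when a transaction fails halfway. In this minimal sketch (Python `sqlite3`, hypothetical `accounts` table and `transfer` helper), a `CHECK` constraint stands in for a "no negative balances" business rule: the credit succeeds, the debit violates the constraint, and `rollback()` undoes the credit so the database returns to its previous state.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical table; the CHECK constraint stands in for a "no negative balances" rule.
conn.execute(
    "CREATE TABLE accounts ("
    "id INTEGER PRIMARY KEY, "
    "balance INTEGER NOT NULL CHECK (balance >= 0))"
)
conn.executemany("INSERT INTO accounts (id, balance) VALUES (?, ?)", [(1, 50), (2, 100)])
conn.commit()


def transfer(conn: sqlite3.Connection, src: int, dst: int, amount: int) -> bool:
    """Hypothetical helper: True if the transfer committed, False if it was rolled back."""
    try:
        # Credit first, so the failure below happens after a write has already succeeded.
        conn.execute("UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst))
        conn.execute("UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src))
        conn.commit()
        return True
    except sqlite3.IntegrityError:
        conn.rollback()  # undo the credit that already went through
        return False


ok = transfer(conn, 1, 2, 80)  # account 1 holds only 50, so the debit violates the CHECK
balances = conn.execute("SELECT id, balance FROM accounts ORDER BY id").fetchall()
print(ok, balances)  # False [(1, 50), (2, 100)]
```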

Main Components of OLTP Systems

- Database Engine

  - Executes queries, manages transactions, and enforces ACID properties.

  - Examples: MySQL InnoDB, PostgreSQL, Oracle Database.

- Transaction Manager

  - Monitors ongoing transactions, manages concurrency, and resolves conflicts.

- Locking & Concurrency Control System

  - Ensures that multiple users can work on the same data without overwriting each other's changes.

- Backup and Recovery Systems

  - Protects against data loss and ensures durability.

- User Interfaces & APIs

  - The applications and APIs through which users and other systems submit transactions.
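The locking component can be observed directly with two connections acting as two users. This minimal sketch uses SQLite, where `BEGIN IMMEDIATE` takes a database-wide write lock; server engines such as PostgreSQL and InnoDB lock individual rows instead, so the granularity differs, but the idea of a second writer being held back is the same. `timeout=0` is chosen so the blocked connection fails immediately instead of waiting, and the `seats` table is hypothetical.

```python
import os
import sqlite3
import tempfile

# Two connections to the same (temporary, hypothetical) database file act as two users.
path = os.path.join(tempfile.mkdtemp(), "booking.db")
# isolation_level=None: we issue BEGIN/COMMIT ourselves; timeout=0: fail fast on a lock.
alice = sqlite3.connect(path, isolation_level=None, timeout=0)
bob = sqlite3.connect(path, isolation_level=None, timeout=0)

alice.execute("CREATE TABLE seats (id INTEGER PRIMARY KEY, holder TEXT)")
alice.execute("INSERT INTO seats (id, holder) VALUES (1, NULL)")

# Alice starts a write transaction; BEGIN IMMEDIATE acquires SQLite's write lock up front.
alice.execute("BEGIN IMMEDIATE")
alice.execute("UPDATE seats SET holder = 'alice' WHERE id = 1")

# Bob tries to start his own write transaction while Alice still holds the lock.
try:
    bob.execute("BEGIN IMMEDIATE")
    bob_blocked = False
except sqlite3.OperationalError:  # SQLite reports "database is locked"
    bob_blocked = True

alice.execute("COMMIT")  # releases the write lock

# Now Bob can write, and he sees Alice's committed change.
bob.execute("BEGIN IMMEDIATE")
holder = bob.execute("SELECT holder FROM seats WHERE id = 1").fetchone()[0]
bob.execute("COMMIT")
print(bob_blocked, holder)  # True alice
```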

Benefits of OLTP Databases

- High Performance: Handles thousands of transactions per second.

- Reliability: ACID compliance ensures accuracy and stability.

- Scalability: Supports large user bases. Replication and clustering let read capacity scale horizontally, while scaling writes usually requires sharding or a distributed database.

- Data Integrity: Prevents data anomalies with strict consistency rules.

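- Up-to-Date Operational Data: Provides current information for day-to-day operational decisions; heavy analytical workloads are usually offloaded to OLAP systems or data warehouses.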

When and How Should We Use OLTP Databases?

- Use OLTP databases when:

  - You need to manage frequent, small transactions.

  - Real-time processing is essential.

  - Data consistency is critical (e.g., finance, healthcare, e-commerce).

- How to use them effectively:

  - Choose a relational DBMS like PostgreSQL, Oracle, SQL Server, or MySQL.

  - Normalize the schema design for data integrity.

  - Add indexes on frequently filtered and joined columns to speed up queries.

  - Use replication and clustering for scalability and availability.

  - Regularly monitor and optimize performance.
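To see what an index changes, the minimal sketch below asks SQLite for its query plan before and after creating one, using a hypothetical `orders` table. Without the index the lookup scans the whole table; with it, SQLite searches the index. The exact wording of the plan varies between SQLite versions, and other databases expose the same information through their own `EXPLAIN` commands.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical orders table: 10,000 rows spread across 1,000 customers.
conn.execute(
    "CREATE TABLE orders ("
    "id INTEGER PRIMARY KEY, customer_id INTEGER NOT NULL, total_cents INTEGER NOT NULL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total_cents) VALUES (?, ?)",
    [(i % 1000, i) for i in range(10_000)],
)
conn.commit()

QUERY = "SELECT id, total_cents FROM orders WHERE customer_id = ?"


def plan(sql: str) -> str:
    # Each EXPLAIN QUERY PLAN row has four columns; the last one is the readable detail.
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, (42,)).fetchall()
    return " | ".join(row[3] for row in rows)


before = plan(QUERY)  # a full table scan, e.g. "SCAN orders"
conn.execute("CREATE INDEX idx_orders_customer_id ON orders (customer_id)")
after = plan(QUERY)   # e.g. "SEARCH orders USING INDEX idx_orders_customer_id (customer_id=?)"
print(before)
print(after)
```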

Real-World Examples of OLTP Databases

- Banking Systems: Handling deposits, withdrawals, and transfers in real time.

- E-commerce Platforms: Managing product purchases, payments, and shipping.

- Airline Reservation Systems: Booking flights, updating seat availability instantly.

- Healthcare Systems: Recording patient check-ins, lab results, and prescriptions.

- Retail Point-of-Sale (POS) Systems: Processing sales transactions quickly.
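Airline seat booking shows a classic OLTP pattern: check availability and claim the seat in a single conditional `UPDATE`, then inspect how many rows changed. This is a minimal sketch with Python's `sqlite3`; the `seats` table, the flight code, and the `book_seat` helper are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical seat inventory; passenger is NULL while the seat is free.
conn.execute(
    "CREATE TABLE seats (flight TEXT, seat TEXT, passenger TEXT, PRIMARY KEY (flight, seat))"
)
conn.executemany("INSERT INTO seats (flight, seat) VALUES (?, ?)", [("XY123", "12A"), ("XY123", "12B")])
conn.commit()


def book_seat(conn: sqlite3.Connection, flight: str, seat: str, passenger: str) -> bool:
    """Hypothetical helper: claim a seat only if it is still free; True on success."""
    with conn:  # commit on success, roll back on error
        # The availability check and the claim happen in one UPDATE statement,
        # so two customers cannot both see the seat as free and both take it.
        cur = conn.execute(
            "UPDATE seats SET passenger = ? "
            "WHERE flight = ? AND seat = ? AND passenger IS NULL",
            (passenger, flight, seat),
        )
    return cur.rowcount == 1


print(book_seat(conn, "XY123", "12A", "Ada"))    # True
print(book_seat(conn, "XY123", "12A", "Grace"))  # False: the seat is already taken
```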

Integrating OLTP Databases into Software Development

- Step 1: Requirement Analysis

- Identify transaction-heavy components in your application.

- Step 2: Schema Design

- Use normalized schemas to ensure consistency.

- Step 3: Choose the Right Database

- For mission-critical systems: Oracle or SQL Server.

- For scalable web apps: PostgreSQL or MySQL.

- Step 4: Implement Best Practices

- Use connection pooling, indexing, and query optimization.

- Step 5: Ensure Reliability

- Set up backups, replication, and monitoring systems.

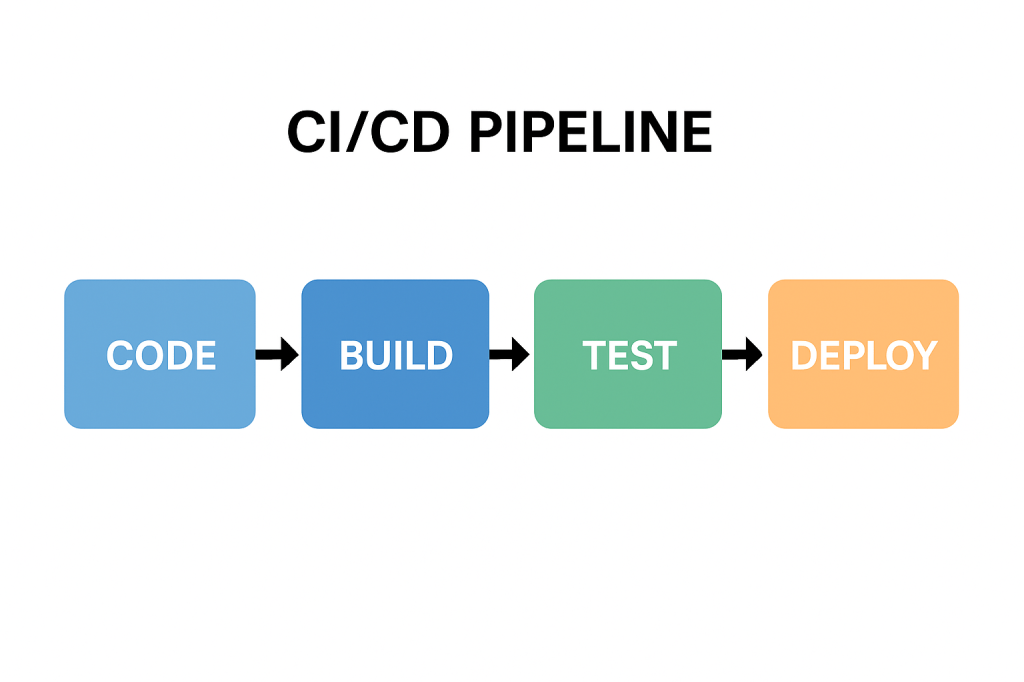

- Step 6: Continuous Integration

- Include database migrations and schema validations in your CI/CD pipeline.
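Step 4 mentions connection pooling. In practice you would rely on your driver's or framework's pool (for example SQLAlchemy's built-in pooling, HikariCP on the JVM, or PgBouncer in front of PostgreSQL). The `ConnectionPool` class below is only a hypothetical, minimal sketch of the idea: open a fixed number of connections once, lend them out, and take them back, so requests do not pay the cost of connecting every time. It uses a temporary SQLite file as a stand-in database.

```python
import os
import queue
import sqlite3
import tempfile
from concurrent.futures import ThreadPoolExecutor
from contextlib import contextmanager


class ConnectionPool:
    """Hypothetical fixed-size pool: illustrative only, not production-ready."""

    def __init__(self, database: str, size: int = 4) -> None:
        self._idle: queue.Queue = queue.Queue(maxsize=size)
        for _ in range(size):
            # check_same_thread=False lets a connection move between threads;
            # the pool ensures only one thread holds it at a time.
            self._idle.put(sqlite3.connect(database, check_same_thread=False))

    @contextmanager
    def connection(self, timeout: float = 5.0):
        # Blocks until a connection is free; raises queue.Empty after `timeout` seconds.
        conn = self._idle.get(timeout=timeout)
        try:
            yield conn
        finally:
            conn.rollback()       # discard uncommitted work so the next borrower starts clean
            self._idle.put(conn)  # hand it back instead of closing it

    def close_all(self) -> None:
        while not self._idle.empty():
            self._idle.get_nowait().close()


path = os.path.join(tempfile.mkdtemp(), "shop.db")  # stand-in database file
pool = ConnectionPool(path, size=2)

with pool.connection() as conn:
    with conn:  # commit on success, roll back on error
        conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
        conn.executemany("INSERT INTO products (name) VALUES (?)", [("pen",), ("ink",)])

used = []  # id() of the connection that served each request


def count_products(_: int) -> int:
    with pool.connection() as conn:
        used.append(id(conn))
        return conn.execute("SELECT COUNT(*) FROM products").fetchone()[0]


# 20 requests from 8 worker threads share just 2 connections.
with ThreadPoolExecutor(max_workers=8) as executor:
    counts = list(executor.map(count_products, range(20)))

pool.close_all()
print(counts[:3], len(set(used)))
```

The pool size caps how many connections the database sees, no matter how many application threads there are; when every connection is busy, extra requests wait in the queue instead of opening new connections.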

Conclusion

OLTP databases are the backbone of modern transaction-driven systems. Their speed, reliability, and ability to support high volumes of concurrent users make them indispensable in industries like finance, healthcare, retail, and travel.

By understanding their history, characteristics, and integration methods, software engineers can effectively design systems that are both scalable and reliable.
