Quick Decision Guide

- Use int for primitive number crunching, counters, loops, and performance-critical code.

- Use Integer when you need null, work with collections/generics/Streams, use it as a map key, or interact with APIs that require objects.

What Are int and Integer?

- int is a primitive 32-bit signed integer type.

  - Range: −2,147,483,648 to 2,147,483,647.

  - Stored directly on the stack or inside objects as a raw value.

- Integer is the wrapper class for int (in java.lang).

  - An immutable object that holds an int value; an Integer reference can also be null.

  - Provides methods like compare, parseInt (static in Integer), etc.
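As a small illustration of the static helpers and the value range mentioned above, here is a minimal sketch. The class name WrapperBasics and the methods parse and order are made up for this example; the Integer calls themselves are standard.

```java
class WrapperBasics {
    // Parse decimal text into a primitive int; throws NumberFormatException on bad input.
    static int parse(String text) {
        return Integer.parseInt(text);
    }

    // Compare two ints without the overflow risk of writing a - b.
    static int order(int a, int b) {
        return Integer.compare(a, b);
    }

    public static void main(String[] args) {
        System.out.println(Integer.MIN_VALUE); // -2147483648
        System.out.println(Integer.MAX_VALUE); // 2147483647
        System.out.println(parse("42") + 1);   // 43
        System.out.println(order(3, 7) < 0);   // true
    }
}
```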

Why Do We Have Two Types?

Java was designed with primitives for performance and memory efficiency. Later, Java introduced generics, Collections, and object-oriented APIs that need reference types. Wrapper classes (like Integer) bridge primitives and object APIs, enabling features primitives can’t provide (e.g., nullability, method parameters of type Object, use as generic type arguments).

Key Differences at a Glance

| Aspect | int (primitive) | Integer (wrapper class) |
|---|---|---|
| Nullability | Cannot be null | Can be null |
| Memory | 4 bytes for the value | Object header + 4 bytes value (+ padding) |
| Performance | Fast (no allocation) | Slower (allocation, GC, boxing/unboxing) |
| Generics/Collections | Not allowed as type argument | Allowed: List<Integer> |
| Default value (fields) | 0 | null |
| Equality | == compares values | == compares references; use .equals() for value |
| Autoboxing | Not applicable | Works with int via autoboxing/unboxing |
| Methods | N/A | Utility & instance methods (compareTo, hashCode, etc.) |
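The default-value row is easy to check directly. The sketch below uses a hypothetical class, Defaults, with one field of each type and no initializers.

```java
class Defaults {
    int primitiveField;   // an int field defaults to 0
    Integer wrapperField; // an Integer field defaults to null

    public static void main(String[] args) {
        Defaults d = new Defaults();
        System.out.println(d.primitiveField); // 0
        System.out.println(d.wrapperField);   // null
    }
}
```

This applies to fields only; local variables of either type must be assigned before use.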

Autoboxing & Unboxing (and the Gotchas)

Java will automatically convert between int and Integer:

    Integer a = 5; // autoboxing: int -> Integer
    int b = a;     // unboxing: Integer -> int

Pitfall: Unboxing a null Integer throws NullPointerException.

    Integer maybeNull = null;
    int x = maybeNull; // NPE at runtime!

Tip: When a value can be absent, prefer OptionalInt/Optional<Integer> or check for null before unboxing.
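One way to apply this tip is to check for null once, at the boundary, and hand callers an OptionalInt. The following is a sketch under assumptions: StockLookup, STOCK, and unitsInStock are hypothetical names, and the map contents are sample data.

```java
import java.util.Map;
import java.util.OptionalInt;

class StockLookup {
    // Hypothetical sample data: item name -> units in stock.
    private static final Map<String, Integer> STOCK = Map.of("apple", 3, "pear", 0);

    // Returns an empty OptionalInt instead of null when the item is unknown.
    static OptionalInt unitsInStock(String item) {
        Integer units = STOCK.get(item); // null when the key is missing
        return (units == null) ? OptionalInt.empty() : OptionalInt.of(units);
    }

    public static void main(String[] args) {
        System.out.println(unitsInStock("apple").orElse(-1));  // 3
        System.out.println(unitsInStock("mango").isPresent()); // false
    }
}
```

Callers then have to decide what "absent" means (orElse, isPresent) instead of unboxing a possibly null reference.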

Integer Caching (−128 to 127)

Autoboxing goes through Integer.valueOf(int), which caches values in [−128, 127]. As a result, small boxed values can be identical by reference, while larger ones usually are not:

    Integer x = 100;
    Integer y = 100;
    System.out.println(x == y);      // true (same cached object)
    System.out.println(x.equals(y)); // true

    Integer p = 1000;
    Integer q = 1000;
    System.out.println(p == q);      // false (different objects)
    System.out.println(p.equals(q)); // true

Rule: Always use .equals() for value comparison with wrappers.
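When either side may be null, calling .equals() on it can itself throw. A minimal sketch of null-safe value comparison follows; IntegerEquality and sameValue are illustrative names, and Objects.equals is the standard java.util helper.

```java
import java.util.Objects;

class IntegerEquality {
    // Null-safe value comparison of two wrappers.
    static boolean sameValue(Integer a, Integer b) {
        return Objects.equals(a, b);
    }

    public static void main(String[] args) {
        Integer p = 1000;
        Integer q = 1000;
        System.out.println(p.equals(q));                  // true: compares values
        System.out.println(p.intValue() == q.intValue()); // true: compares primitives
        System.out.println(sameValue(p, null));           // false, no NullPointerException
        System.out.println(sameValue(null, null));        // true
    }
}
```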

When to Use int

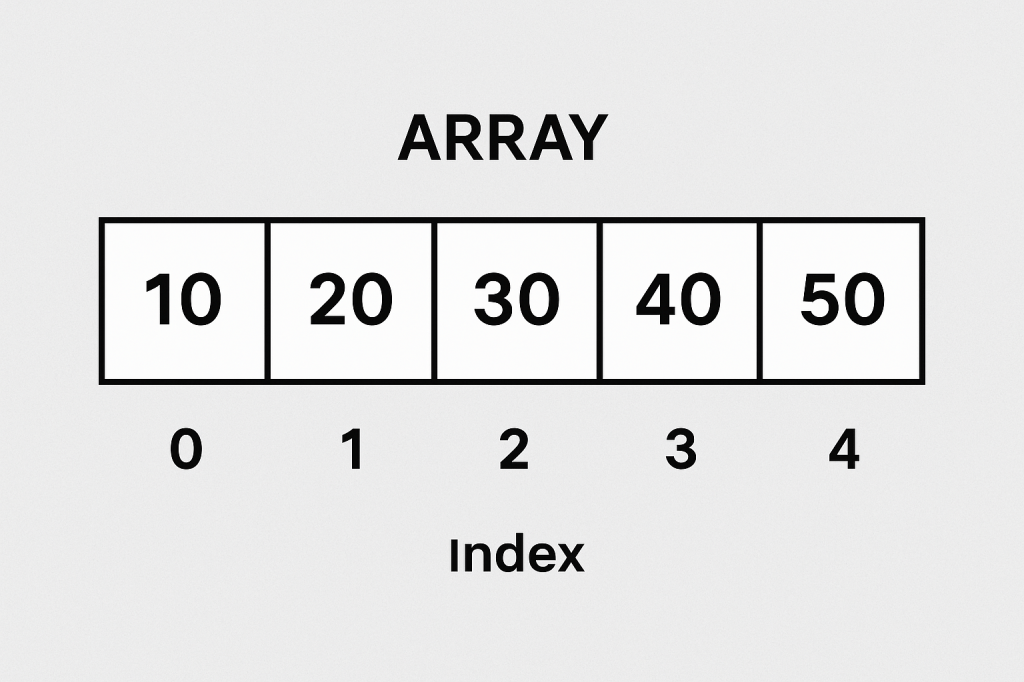

- Counters, indices, arithmetic in tight loops.

- Performance-critical code paths to avoid allocation/GC.

- Fields that are always present (never absent) and don’t need object semantics.

- Switch statements and bit-level operations.

Example:

    int[] nums = {3, 5, 8}; // sample data
    int sum = 0;
    for (int i = 0; i < nums.length; i++) {
        sum += nums[i];
    }

When to Use Integer

- Collections/Generics/Streams require reference types:

List<Integer> scores = List.of(10, 20, 30);

- Nullable numeric fields (e.g., optional DB columns, partially populated DTOs).

- Map keys or values where object semantics and equals/hashCode matter:

Map<Integer, String> userById = new HashMap<>();

- APIs that expect Object or reflection/serialization frameworks.
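To make the collection and map cases concrete, the sketch below counts how often each score occurs. ScoreCounter and frequencies are hypothetical names; Map.merge and Integer::sum are standard library calls.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class ScoreCounter {
    // Counts occurrences of each score; keys and values are boxed Integers.
    static Map<Integer, Integer> frequencies(List<Integer> scores) {
        Map<Integer, Integer> counts = new HashMap<>();
        for (int score : scores) {                // unboxes each element
            counts.merge(score, 1, Integer::sum); // boxes the key and the count
        }
        return counts;
    }

    public static void main(String[] args) {
        Map<Integer, Integer> counts = frequencies(List.of(10, 20, 10));
        System.out.println(counts.get(10)); // 2
        System.out.println(counts.get(30)); // null: no such key
    }
}
```

Note that get returns null for a missing key, so assigning its result straight to an int would risk a NullPointerException.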

Benefits of Each

Benefits of int

- Speed & low memory footprint.

- No NullPointerException from unboxing.

- Straightforward arithmetic.

Benefits of Integer

- Nullability to represent “unknown/missing”.

- Works with Collections, Generics, Streams.

- Provides utility methods and can be used in APIs requiring objects.

- Immutability makes it safe as a map key.

When Not to Use Them

- Avoid Integer in hot loops or large arrays where performance/memory is critical (boxing creates many objects).

  - Prefer int[] over List<Integer> when possible.

- Avoid int when a value might be absent or needs to live in a collection or generic API.

- Beware of unboxing nulls: if a value can be null, don’t immediately unbox to int.
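The boxing cost in a loop is easy to introduce by accident, because a boxed accumulator compiles and gives the same answer. The sketch below contrasts the two; BoxingChurn and its method names are made up for illustration, and how much the boxed version actually costs depends on the JVM and its optimizations.

```java
class BoxingChurn {
    // Boxed accumulator: every += unboxes total, adds, then boxes the result again,
    // which may allocate a new Integer per iteration for values outside the cache.
    static int sumBoxed(int[] values) {
        Integer total = 0;
        for (int v : values) {
            total += v;
        }
        return total;
    }

    // Primitive accumulator: plain arithmetic, no wrapper objects involved.
    static int sumPrimitive(int[] values) {
        int total = 0;
        for (int v : values) {
            total += v;
        }
        return total;
    }

    public static void main(String[] args) {
        int[] values = {1, 2, 3, 4};
        System.out.println(sumBoxed(values));     // 10
        System.out.println(sumPrimitive(values)); // 10
    }
}
```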

Practical Patterns

1) DTO with Optional Field

    class ProductDto {
        private Integer discountPercent; // can be null if no discount

        // getters/setters
    }

2) Streams: Primitive vs Boxed

    int sum = IntStream.of(1, 2, 3).sum(); // primitive stream: fast

    int sum2 = List.of(1, 2, 3).stream()
            .mapToInt(Integer::intValue)
            .sum(); // boxed -> primitive

3) Safe Handling of Nullable Integer

    Integer maybe = fetchCount(); // might be null
    int count = (maybe != null) ? maybe : 0; // avoid NPE

4) Overloads & Method Selection

If you provide both:

    void setValue(int v) { /* ... */ }
    void setValue(Integer v) { /* ... */ }

- Passing a literal (setValue(5)) picks int.

- Passing null only compiles for Integer (setValue(null)).
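The selection rules can be observed by having each overload report which one ran. This is a sketch only: OverloadDemo is a hypothetical class, and the overloads return a label instead of storing a value.

```java
class OverloadDemo {
    static String setValue(int v)     { return "int"; }
    static String setValue(Integer v) { return "Integer"; }

    public static void main(String[] args) {
        System.out.println(setValue(5));                  // int: exact match, no boxing needed
        System.out.println(setValue(Integer.valueOf(5))); // Integer: argument is already boxed
        System.out.println(setValue(null));               // Integer: null is not a valid int
    }
}
```

The compiler first looks for a match that needs no boxing or unboxing, which is why an int argument never reaches the Integer overload while the int overload exists.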

Common FAQs

Q: Why does List<int> not compile?

A: Generics require reference types; use List<Integer> or int[].

Q: Why does x == y sometimes work for small Integers?

A: Because of Integer caching (−128 to 127). Don’t rely on it—use .equals().

Q: I need performance but also collections—what can I do?

A: Use primitive arrays (int[]) or primitive streams (IntStream) to compute, then convert minimally when you must interact with object-based APIs.
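A minimal sketch of converting at the boundary, assuming the data arrives as a List<Integer> and must leave as one. Conversions, toPrimitive, and toBoxed are illustrative names; the stream calls are standard.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

class Conversions {
    // Unbox a List<Integer> into an int[] for primitive number crunching.
    // Throws NullPointerException if the list contains a null element.
    static int[] toPrimitive(List<Integer> boxed) {
        return boxed.stream().mapToInt(Integer::intValue).toArray();
    }

    // Box an int[] into a List<Integer> for object-based APIs.
    static List<Integer> toBoxed(int[] values) {
        return Arrays.stream(values).boxed().collect(Collectors.toList());
    }

    public static void main(String[] args) {
        int[] raw = toPrimitive(List.of(3, 1, 2));
        Arrays.sort(raw);                 // primitive sort, no boxing
        System.out.println(toBoxed(raw)); // [1, 2, 3]
    }
}
```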

Cheat Sheet

- Performance: int > Integer

- Nullability: Integer

- Collections/Generics: Integer

- Equality: int uses ==; Integer uses .equals() for values

- Hot loops / big data: prefer int / int[]

- Optional numeric: Integer or OptionalInt (for primitives)

Mini Example: Mixing Both Correctly

    import java.util.HashMap;
    import java.util.Map;

    class Scoreboard {
        private final Map<Integer, String> playerById = new HashMap<>(); // needs Integer
        private int totalScore = 0; // fast primitive

        void addScore(int playerId, int score) {
            totalScore += score; // primitive math
            playerById.put(playerId, "Player-" + playerId); // playerId is autoboxed as the key
        }

        String findPlayer(Integer playerId) {
            // Accepts null safely; returns null if the id is null or the player is absent
            return (playerId == null) ? null : playerById.get(playerId);
        }
    }

Final Guidance

- Default to int for computation and tight loops.

- Choose Integer for nullability and object-centric APIs (Collections, Generics, frameworks).

- Watch for NPE from unboxing and avoid boxing churn in performance-sensitive code.

- Use .equals() for comparing Integer values, not ==.
